Workflow of Other Datasets#

Welcome! This tutorial covers a default workflow, from logging data to preparing it for training.

Note

This workflow covers the DatasetForTextClassification, DatasetForTokenClassification, and DatasetForText2Text. The workflow for FeedbackDataset can be found here. Not sure which dataset to use? Check out our section on choosing a dataset.

Install Libraries#

Install the latest version of Argilla in Colab, along with other libraries and models used in this notebook.

[ ]:

!pip install argilla datasets transformers evaluate spacy-transformers transformers[torch] requests

!python -m spacy download en_core_web_sm

Set Up Argilla#

If you have already deployed Argilla Server, then you can skip this step. Otherwise, you can quickly deploy it in two different ways:

You can deploy Argilla Server on HF Spaces.

Alternatively, if you want to run Argilla locally on your own computer, the easiest way to get Argilla UI up and running is to deploy on Docker:

docker run -d --name quickstart -p 6900:6900 argilla/argilla-quickstart:latest

More info on Installation here.

Connect to Argilla#

To connect to your Argilla instance, import the Argilla library and call rg.init(), using the following environment variables and settings:

ARGILLA_API_URL: It is the URL of the Argilla Server. If you’re using Docker, it is http://localhost:6900 by default. If you’re using HF Spaces, it is constructed as https://[your-owner-name]-[your_space_name].hf.space.

ARGILLA_API_KEY: It is the API key of the Argilla Server. It is owner by default.

HF_TOKEN: It is the Hugging Face API token. It is only needed if you’re using a private HF Space. You can configure it in your profile: Setting > Access Tokens.

workspace: It is a “space” inside your Argilla instance where authorized users can collaborate. It’s argilla by default.

For more info about custom configurations like headers, workspace separation or access credentials, check our config page.
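As a minimal sketch of how the settings above fit together, the snippet below reads the two environment variables with the Docker default as a fallback and builds the HF Spaces URL pattern. The helper names resolve_argilla_settings and hf_space_url are hypothetical and not part of Argilla; the resulting values would then be passed to rg.init().

```python
import os


def resolve_argilla_settings(env=None, workspace="argilla"):
    """Collect connection settings (hypothetical helper, not an Argilla API)."""
    env = os.environ if env is None else env
    return {
        # Docker default from the text above, used when the variable is unset
        "api_url": env.get("ARGILLA_API_URL", "http://localhost:6900"),
        # No fallback here on purpose: supply the key of your own server
        "api_key": env.get("ARGILLA_API_KEY"),
        "workspace": workspace,
    }


def hf_space_url(owner, space):
    """Build https://[your-owner-name]-[your_space_name].hf.space."""
    return f"https://{owner}-{space}.hf.space"


settings = resolve_argilla_settings({"ARGILLA_API_KEY": "my-key"})
print(settings["api_url"])
print(hf_space_url("my-owner", "my-space"))
```

Passing a plain dictionary instead of os.environ, as in the example call, makes the helper easy to try without touching your shell configuration.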

[1]:

import argilla as rg

from argilla._constants import DEFAULT_API_KEY

[2]:

# Argilla credentials

api_url = "http://localhost:6900" # "https://<YOUR-HF-SPACE>.hf.space"

api_key = DEFAULT_API_KEY # admin.apikey

# Huggingface credentials

hf_token = "hf_..."

[3]:

rg.init(api_url=api_url, api_key=api_key)

# # If you want to use your private HF Space

# rg.init(extra_headers={"Authorization": f"Bearer {hf_token}"})

C:\Users\sarah\Documents\argilla\src\argilla\client\client.py:154: UserWarning: Default user was detected and no workspace configuration was provided, so the default 'argilla' workspace will be used. If you want to setup another workspace, use the `rg.set_workspace` function or provide a different one on `rg.init`

warnings.warn(

Enable Telemetry#

We gain valuable insights from how you interact with our tutorials. Running the following lines of code helps us understand whether this tutorial is serving you effectively, so that we can offer you the most suitable content. This is entirely anonymous, and you can skip this step if you prefer. For more info, please check out the Telemetry page.

[ ]:

try:
    from argilla.utils.telemetry import tutorial_running

    tutorial_running()
except ImportError:
    print("Telemetry is introduced in Argilla 1.20.0 and not found in the current installation. Skipping telemetry.")

Upload data#

The main component of the Argilla data model is called a record. Records can be of different types depending on the currently supported tasks:

TextClassificationRecord

TokenClassificationRecord

Text2TextRecord

The most critical attributes of a record that are common to all types are:

text: The input text of the record (Required);

annotation: Annotate your record in a task-specific manner (Optional);

prediction: Add task-specific model predictions to the record (Optional);

metadata: Add some arbitrary metadata to the record (Optional).

A Dataset in Argilla is a collection of records of the same type.

[ ]:

# Create a basic text classification record

textcat_record = rg.TextClassificationRecord(

text="Hello world, this is me!",

prediction=[("LABEL1", 0.8), ("LABEL2", 0.2)],

annotation="LABEL1",

multi_label=False,

)

# Create a basic token classification record

tokencat_record = rg.TokenClassificationRecord(

text="Michael is a professor at Harvard",

tokens=["Michael", "is", "a", "professor", "at", "Harvard"],

prediction=[("NAME", 0, 7), ("LOC", 26, 33)],

)

# Create a basic text2text record

text2text_record = rg.Text2TextRecord(

text="My name is Sarah and I love my dog.",

prediction=["Je m'appelle Sarah et j'aime mon chien."],

)

# Upload (log) the records to corresponding datasets in the Argilla web app

rg.log(textcat_record, "my_textcat_dataset")

rg.log(tokencat_record, "my_tokencat_dataset")

rg.log(text2text_record, "my_text2text_dataset")

Now you can access your datasets in the Argilla web app and look at your first records.
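The token classification prediction above uses (label, start, end) tuples with character offsets into the text. The following sketch, which uses a hypothetical helper check_spans and does not call Argilla, shows one way to sanity-check such offsets before logging a record.

```python
def check_spans(text, spans):
    """Return the substring covered by each (label, start, end) span."""
    covered = []
    for label, start, end in spans:
        # Offsets are character positions; end is exclusive, as in slicing
        if not 0 <= start < end <= len(text):
            raise ValueError(f"span {(label, start, end)} is out of range")
        covered.append((label, text[start:end]))
    return covered


text = "Michael is a professor at Harvard"
spans = [("NAME", 0, 7), ("LOC", 26, 33)]
print(check_spans(text, spans))
# [('NAME', 'Michael'), ('LOC', 'Harvard')]
```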

However, most of the time, you will have your data in some file format, like TXT, CSV, or JSON. Argilla relies on two well-known Python libraries to read these files: pandas and datasets. After reading the files with one of those libraries, Argilla provides shortcuts to create your records automatically. Make sure to match the column names with the required attributes of the record type you want to create.

# Using a pandas dataframe

dataset_rg = rg.read_pandas(dataframe, task="TextClassification")

# Using a Dataset

dataset_rg = rg.read_datasets(dataset, task="TokenClassification")

As mentioned earlier, you choose the record type depending on the task you want to tackle.
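Matching column names to record attributes is often the only preparation a file needs. The sketch below illustrates that renaming step with the standard library only; the CSV content, the column names review and sentiment, and the helper rename_columns are made up for illustration. With pandas or datasets you would rename the columns in the same spirit before calling rg.read_pandas or rg.read_datasets.

```python
import csv
import io

# Illustrative CSV whose column names do not yet match the record attributes
csv_text = "review,sentiment\nGreat movie!,pos\nToo long and dull.,neg\n"

# Map source columns to the attributes a TextClassificationRecord expects
column_map = {"review": "text", "sentiment": "annotation"}


def rename_columns(rows, mapping):
    """Rename the keys of each row, leaving unmapped columns untouched."""
    return [{mapping.get(key, key): value for key, value in row.items()} for row in rows]


rows = rename_columns(csv.DictReader(io.StringIO(csv_text)), column_map)
print(rows[0])
# {'text': 'Great movie!', 'annotation': 'pos'}
```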

1. TextClassification#

In our example, we’re going to work with a section of the IMDb dataset available on Hugging Face. The underlying task here could be to classify the reviews by their sentiment.

[75]:

from datasets import load_dataset

dataset = load_dataset("imdb", split="train").shuffle(seed=42).select(range(100))

[36]:

dataset[0]

[36]:

{'text': 'There is no relation at all between Fortier and Profiler but the fact that both are police series about violent crimes. Profiler looks crispy, Fortier looks classic. Profiler plots are quite simple. Fortier\'s plot are far more complicated... Fortier looks more like Prime Suspect, if we have to spot similarities... The main character is weak and weirdo, but have "clairvoyance". People like to compare, to judge, to evaluate. How about just enjoying? Funny thing too, people writing Fortier looks American but, on the other hand, arguing they prefer American series (!!!). Maybe it\'s the language, or the spirit, but I think this series is more English than American. By the way, the actors are really good and funny. The acting is not superficial at all...',

'label': 1}

As we can see, the dataset has two columns: text and label. We will use the label as the annotation of our record. Thus, to match the required attributes of a TextClassificationRecord, we need to rename the columns.

[76]:

dataset = dataset.rename_column("label", "annotation")

Now, we can inspect our dataset.

[77]:

dataset.select(range(3)).to_pandas()

[77]:

| | text | annotation |
|---|---|---|
| 0 | There is no relation at all between Fortier an... | 1 |
| 1 | This movie is a great. The plot is very true t... | 1 |
| 2 | George P. Cosmatos' "Rambo: First Blood Part I... | 0 |

Once we have checked that everything is correct, we can convert it to an Argilla dataset.

[ ]:

dataset_rg = rg.read_datasets(dataset, task="TextClassification")

We will upload this dataset to the web app and give it the name imdb.

[ ]:

rg.log(dataset_rg, "imdb")

You can configure labels programmatically by using the configure_dataset_settings method:

labels = ["pos", "neg"]

settings = rg.TextClassificationSettings(label_schema=labels)

rg.configure_dataset_settings(name="imdb", settings=settings)

2. TokenClassification#

We will use the ag_news dataset from Hugging Face for this example. The underlying task here could be to extract the places and people involved in the events described in the headlines.

So, we will start by loading the dataset and analyzing it.

[78]:

from datasets import load_dataset

dataset = load_dataset("ag_news", split="train").shuffle(seed=50).select(range(100))

[79]:

# The best way to visualize a Dataset is actually via pandas

dataset.select(range(3)).to_pandas()

[79]:

| | text | label |
|---|---|---|
| 0 | Bills' Milloy Ready to Make Season Debut (AP) ... | 1 |
| 1 | MLB: Atlanta 6, Houston 5 JD Drew extended Atl... | 1 |
| 2 | PARMALAT: FT, BONDI WANTS 1 BLN DOLLARS FROM I... | 2 |

As the label is not needed in this case, we will add it as metadata.

[ ]:

def metadata_to_dict(row):
    metadata = {}
    metadata["label"] = row["label"]
    row["metadata"] = metadata
    return row

dataset = dataset.map(metadata_to_dict, remove_columns=["label"])

In contrast to the other types, token classification records need the input text and the corresponding tokens. So let us tokenize our input text in a small helper function and add the tokens to a new column called tokens.

Note

We will use spaCy to tokenize the text, but you can use whatever library you prefer.

[ ]:

import spacy

# Load an English spaCy model to tokenize our text
nlp = spacy.load("en_core_web_sm")

# Define our tokenize function
def tokenize(row):
    tokens = [token.text for token in nlp(row["text"])]
    return {"tokens": tokens}

# Map the tokenize function to our dataset

dataset = dataset.map(tokenize)
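Since any tokenizer can be used, here is a minimal sketch of a dependency-free alternative based on a regular expression. It is not equivalent to spaCy's tokenizer (for example, it splits every punctuation character into its own token), and the names simple_tokenize and tokens_align are hypothetical. The second helper checks that the tokens can be found in the text in order, which is a useful sanity check for any tokenizer you plug in.

```python
import re

# Words (letters, digits, underscore) or single non-space punctuation characters
TOKEN_PATTERN = re.compile(r"\w+|[^\w\s]")


def simple_tokenize(text):
    """Split text into word and punctuation tokens."""
    return TOKEN_PATTERN.findall(text)


def tokens_align(text, tokens):
    """Check that every token occurs in the text, in order."""
    position = 0
    for token in tokens:
        position = text.find(token, position)
        if position == -1:
            return False
        position += len(token)
    return True


sample = "MLB: Atlanta 6, Houston 5"
tokens = simple_tokenize(sample)
print(tokens)
# ['MLB', ':', 'Atlanta', '6', ',', 'Houston', '5']
print(tokens_align(sample, tokens))
# True
```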

Let us have a quick look at our extended Dataset:

[82]:

dataset.select(range(3)).to_pandas()

[82]:

| | text | metadata | tokens |
|---|---|---|---|
| 0 | Bills' Milloy Ready to Make Season Debut (AP) ... | {'label': 1} | [Bills, ', Milloy, Ready, to, Make, Season, De... |
| 1 | MLB: Atlanta 6, Houston 5 JD Drew extended Atl... | {'label': 1} | [MLB, :, Atlanta, 6, ,, Houston, 5, JD, Drew, ... |
| 2 | PARMALAT: FT, BONDI WANTS 1 BLN DOLLARS FROM I... | {'label': 2} | [PARMALAT, :, FT, ,, BONDI, WANTS, 1, BLN, DOL... |

We can now read this Dataset with Argilla, which will automatically create the records and put them in an Argilla Dataset.

[69]:

# Read Dataset into an Argilla Dataset

dataset_rg = rg.read_datasets(dataset, task="TokenClassification")

We will upload this dataset to the web app and give it the name ag_news.

[ ]:

# Log the dataset to the Argilla web app

rg.log(dataset_rg, "ag_news")

You can configure labels programmatically by using the configure_dataset_settings method:

labels = ["PER", "ORG", "LOC", "MISC"]

settings = rg.TokenClassificationSettings(label_schema=labels)

rg.configure_dataset_settings(name="ag_news", settings=settings)

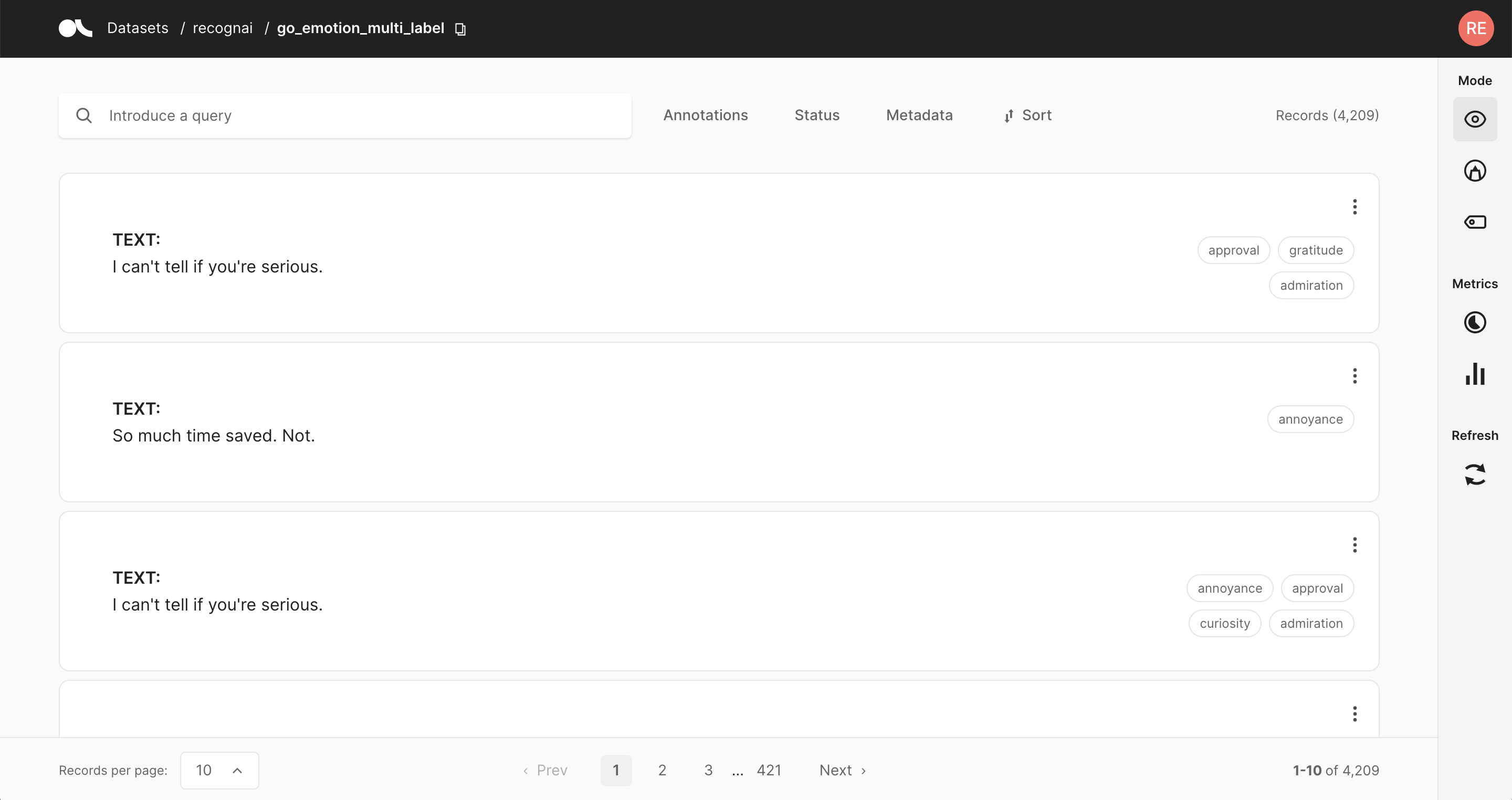

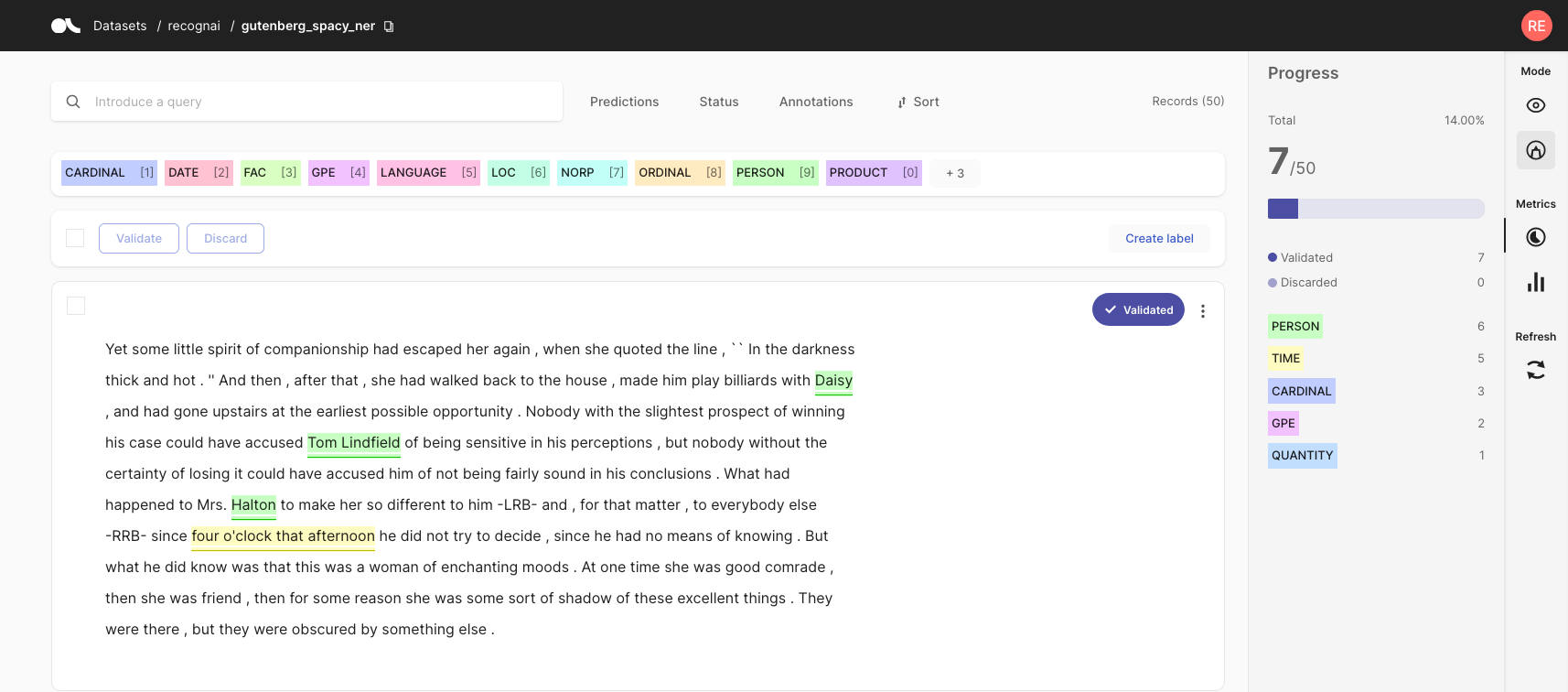

You can also create labels in the Dataset Settings and start annotating:

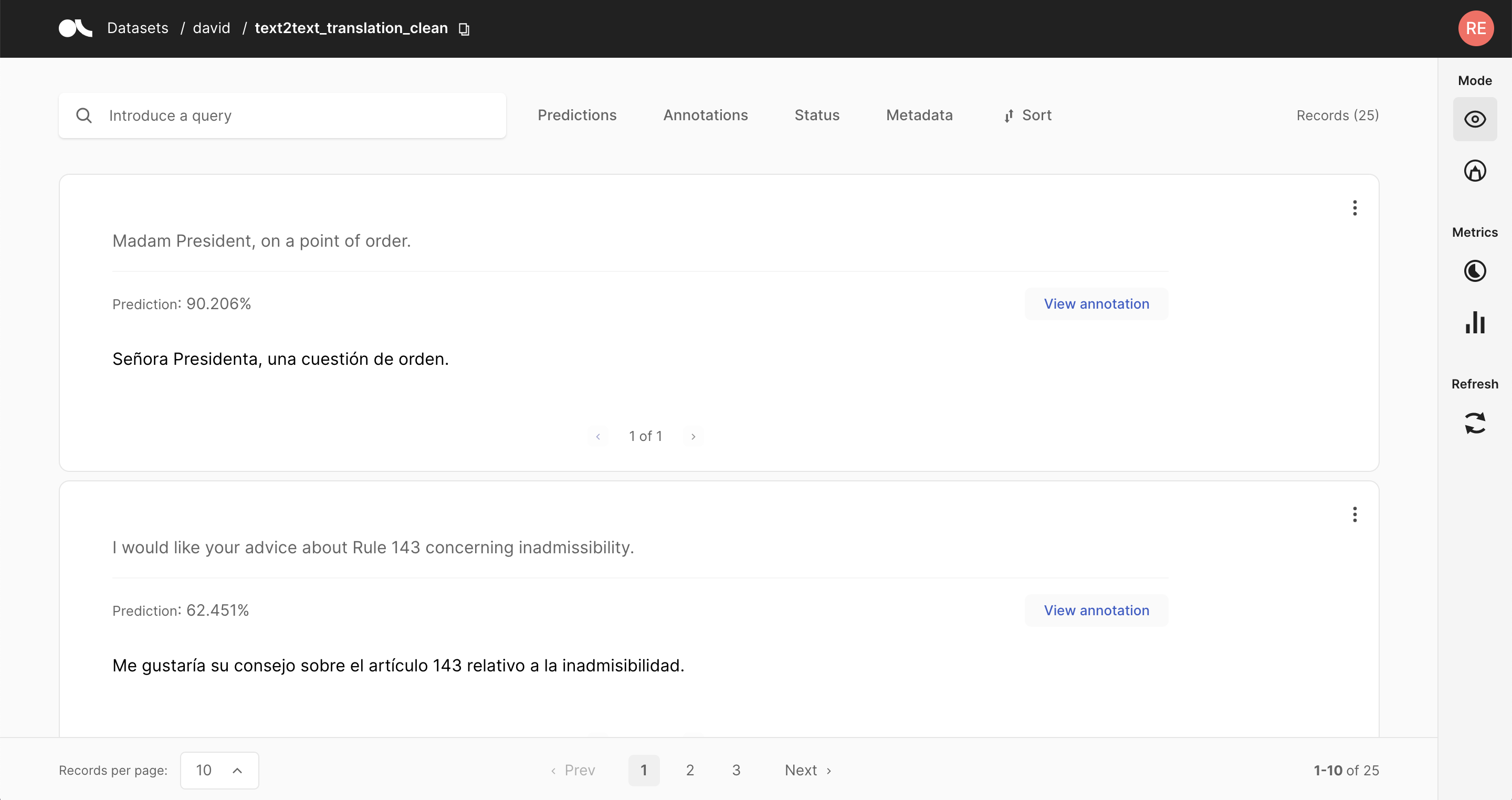

3. Text2Text#

In this example, we will use English sentences from the European Center for Disease Prevention and Control available at the Hugging Face Hub. The underlying task here could be to translate the sentences into other European languages.

Let us load the data with datasets from the Hub.

[83]:

from datasets import load_dataset

# Load the Dataset from the Hugging Face Hub and extract a subset of the train split as example

dataset = load_dataset("europa_ecdc_tm", "en2fr", split="train").shuffle(seed=30).select(range(100))

and have a quick look at the first row of the resulting Dataset:

[ ]:

dataset[0]

{'translation': {'en': 'Vaccination against hepatitis C is not yet available.',

'fr': 'Aucune vaccination contre l’hépatite C n’est encore disponible.'}}

We can see that the English sentences are nested in a dictionary inside the translation column.

To extract English sentences into a new text column we will write a quick helper function and map the whole Dataset with it.

French sentences will be extracted into a new prediction column, wrapped in “[ ]”, as the prediction field of Text2TextRecord accepts a list of strings or tuples.

[ ]:

# Define our helper extract function

def extract(row):
    return {"text": row["translation"]["en"], "prediction": [row["translation"]["fr"]]}

# Map the extract function to our dataset

dataset = dataset.map(extract, remove_columns = ["translation"])
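Before mapping a helper over a whole Dataset, it can be handy to try it on a plain dictionary shaped like one row. The sketch below repeats the extract function so that it runs on its own and applies it to a row built from a sentence pair of this dataset; no Hugging Face or Argilla calls are involved.

```python
def extract(row):
    """Pull the English text and wrap the French translation in a list."""
    return {"text": row["translation"]["en"], "prediction": [row["translation"]["fr"]]}


# A plain dict with the same nesting as one row of the dataset
sample_row = {"translation": {"en": "HIV infection", "fr": "Infection à VIH"}}

result = extract(sample_row)
print(result)
# {'text': 'HIV infection', 'prediction': ['Infection à VIH']}
```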

Let us have a quick look at our extended Dataset:

[ ]:

dataset.select(range(3)).to_pandas()

| | text | prediction |
|---|---|---|
| 0 | Vaccination against hepatitis C is not yet ava... | [Aucune vaccination contre l’hépatite C n’est ... |
| 1 | HIV infection | [Infection à VIH] |
| 2 | The human immunodeficiency virus (HIV) remains... | [L’infection par le virus de l’immunodéficienc... |

We can now read this Dataset with Argilla, which will automatically create the records and put them in an Argilla Dataset.

[ ]:

# Read Dataset into an Argilla Dataset

dataset_rg = rg.read_datasets(dataset, task="Text2Text")

We will upload this dataset to the web app and give it the name ecdc_en.

[ ]:

# Log the dataset to the Argilla web app

rg.log(dataset_rg, "ecdc_en")

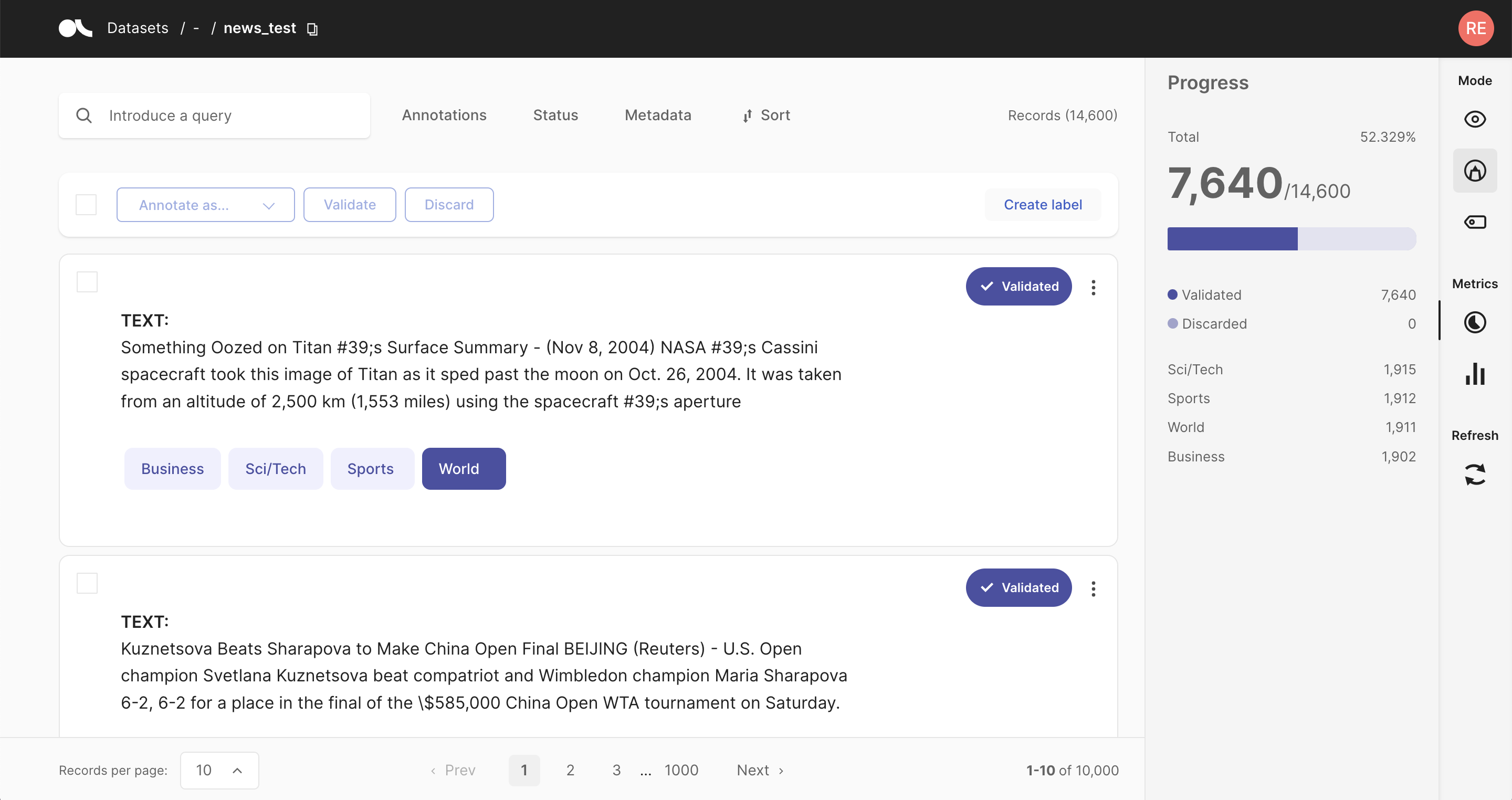

Label datasets#

Argilla provides several ways to label your data. Using Argilla’s UI, you can mix and match the following options:

Manually labeling each record using the specialized interface for each task type;

Leveraging a user-provided model and validating its predictions;

Defining heuristic rules to produce “noisy labels” which can then be combined with weak supervision.

Each way has its pros and cons, and the best match largely depends on your individual use case.

Annotation guideline#

Before starting the annotation process with a team, it is important to align the different understandings of the task that team members hold, because the same text will be annotated by multiple annotators independently and we might want to revisit an old dataset later on. Besides a set of obvious mistakes, we also often encounter uncertain grey areas. Consider the following phrase for NER annotation: Harry Potter and the Prisoner of Azkaban. It can be interpreted in many ways: the entire phrase is a movie title, Harry Potter is a person, and Azkaban is a location. Maybe we don’t even want to annotate fictional locations and characters. Therefore, it is important to define these assumptions beforehand and iterate over them together with the team. Take a look at this blog from our friends over at superb.ai or this blog from Grammarly for more context.

1. Manual labeling#

The straightforward approach of manual annotation might be necessary if you do not have a suitable model for your use case or cannot come up with good heuristic rules for your dataset. It can also be a good approach if you have a large annotation workforce at your disposal or require few but unbiased and high-quality labels.

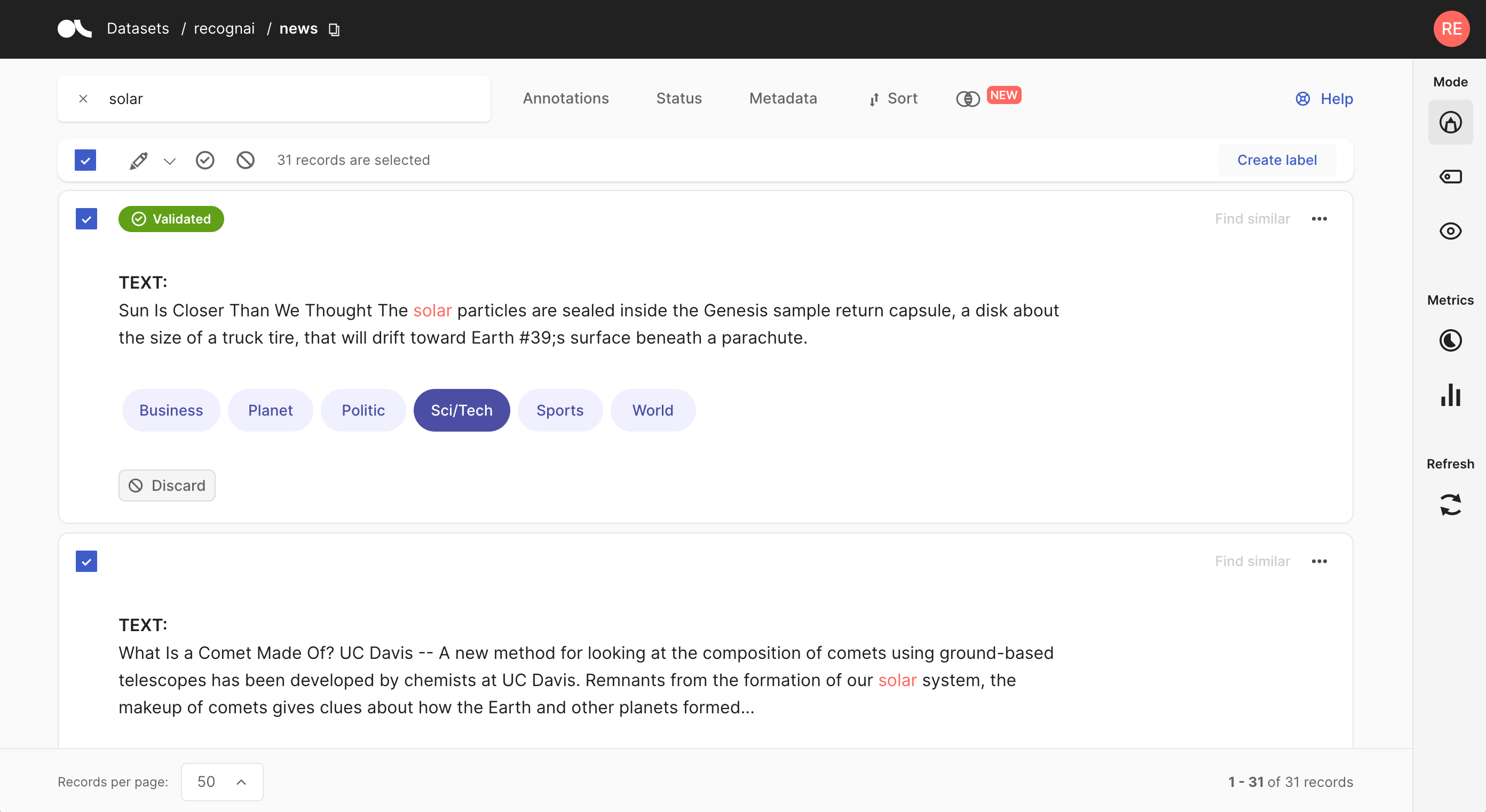

Argilla tries to make this relatively cumbersome approach as painless as possible. Via an intuitive and adaptive UI, its exhaustive search and filter functionalities, and bulk annotation capabilities, Argilla turns the manual annotation process into an efficient option.

Look at our dedicated feature reference for a detailed and illustrative guide on manually annotating your dataset with Argilla.

2. Validating predictions#

Nowadays, many pre-trained or zero-shot models are available online via model repositories like the Hugging Face Hub. Most of the time, you will probably find a model that already suits your specific dataset and task to some degree. In Argilla, you can pre-annotate your data by including predictions from these models in your records. Assuming that the model works reasonably well on your dataset, you can filter for records with high prediction scores and validate the predictions. In this way, you can rapidly annotate part of your data and lighten the annotation workload.

One downside of this approach is that your annotations will be subject to the possible biases and mistakes of the pre-trained model. When guided by pre-trained models, it is common to see human annotators get influenced by them. Therefore, it is advisable to avoid pre-annotations when building a rigorous test set for the final model evaluation.

Check the introduction tutorial to learn how to add predictions to the records. Our feature reference also includes a detailed guide on validating predictions in the Argilla web app.
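The idea of filtering for high prediction scores can be sketched in plain Python. The records, scores, and helper names below are made up for illustration, and the 0.9 threshold is an arbitrary assumption; in practice you would apply the equivalent filter in the Argilla web app or on your own records.

```python
# Illustrative records with (label, score) predictions; the scores are invented
records = [
    {"text": "A wonderful, moving film.", "prediction": [("pos", 0.97), ("neg", 0.03)]},
    {"text": "It was fine, I guess.", "prediction": [("pos", 0.55), ("neg", 0.45)]},
    {"text": "Dull and far too long.", "prediction": [("neg", 0.91), ("pos", 0.09)]},
]


def top_prediction(record):
    """Return the (label, score) pair with the highest score."""
    return max(record["prediction"], key=lambda pair: pair[1])


def confident_records(records, threshold=0.9):
    """Keep only records whose best prediction reaches the threshold."""
    return [record for record in records if top_prediction(record)[1] >= threshold]


for record in confident_records(records):
    label, score = top_prediction(record)
    print(f"{label} ({score:.2f}): {record['text']}")
```

Records below the threshold, such as the second one here, are the ones that benefit most from careful manual review.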

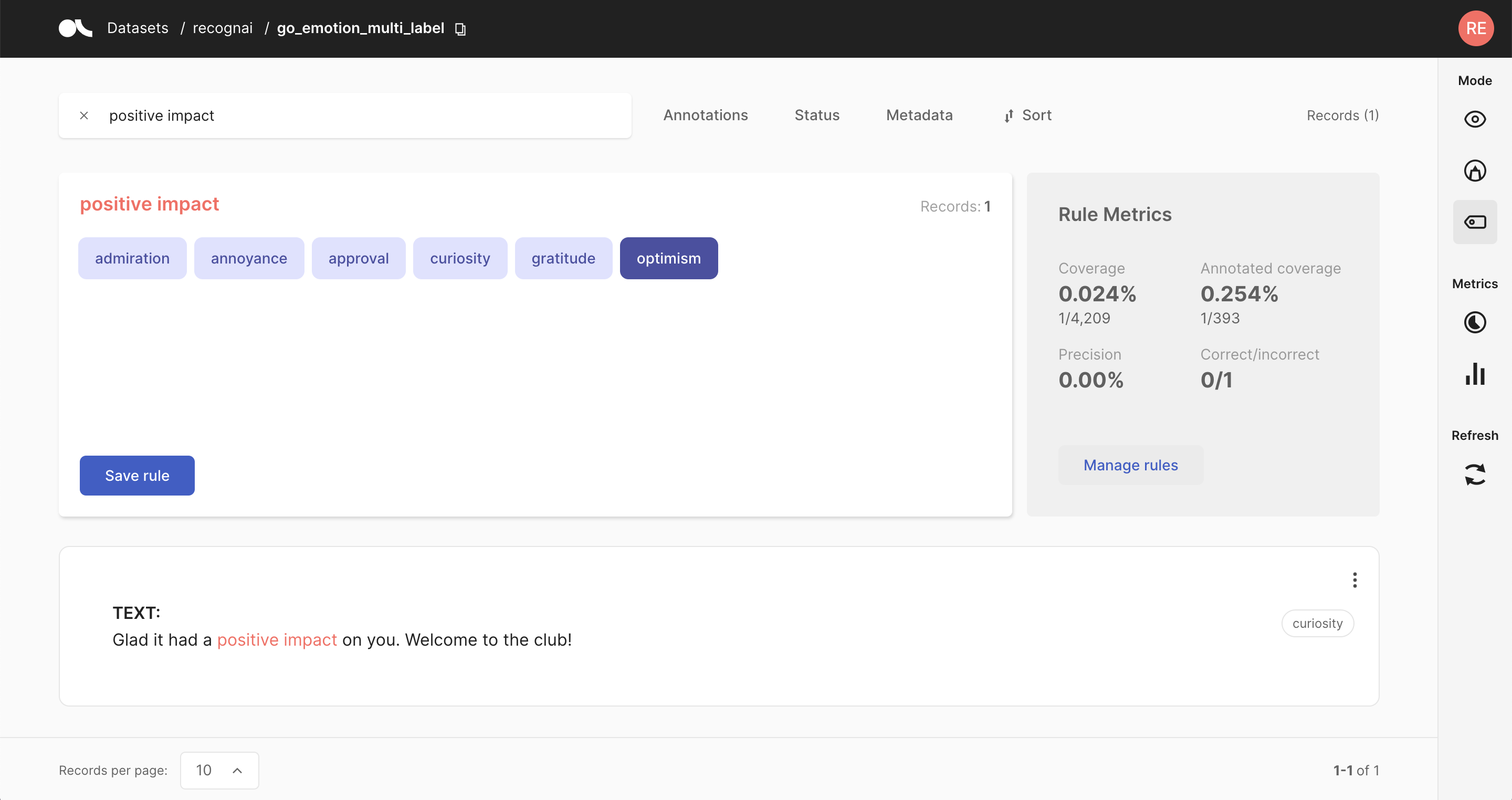

3. Weak labeling rules#

Another approach to annotating your data is to define heuristic rules tailored to your dataset. For example, let us assume you want to classify news articles into the categories of Finance, Sports, and Culture. In this case, a reasonable rule would be to label all articles that include the word “stock” as Finance.

Rules can get arbitrarily complex and can also include the record’s metadata. The downside of this approach is that it might be challenging to come up with working heuristic rules for some datasets. Furthermore, rules are rarely 100% precise and often conflict with each other. These noisy labels can be cleaned up using weak supervision and label models, or something as simple as majority voting. It is usually a trade-off between the amount of annotated data and the quality of the labels.
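To make the idea concrete, here is a minimal sketch of keyword rules combined by majority voting. It is written in plain Python rather than with Argilla's weak supervision API; the rules other than the “stock” example and the function majority_vote are assumptions made for illustration. Each rule either returns a label or abstains with None, and ties are left unlabeled.

```python
from collections import Counter

# Each rule returns a label or None (abstain). Only the "stock" rule comes
# from the text above; the others are invented for this example.
RULES = [
    lambda text: "Finance" if "stock" in text.lower() else None,
    lambda text: "Finance" if "shares" in text.lower() else None,
    lambda text: "Sports" if "match" in text.lower() else None,
    lambda text: "Culture" if "exhibition" in text.lower() else None,
]


def majority_vote(text, rules=RULES):
    """Return the most voted label, or None if no rule fires or votes tie."""
    votes = Counter()
    for rule in rules:
        label = rule(text)
        if label is not None:
            votes[label] += 1
    if not votes:
        return None
    ranked = votes.most_common(2)
    if len(ranked) == 2 and ranked[0][1] == ranked[1][1]:
        return None  # conflicting rules with equal support
    return ranked[0][0]


print(majority_vote("Tech stock rallies as shares climb"))  # Finance
print(majority_vote("Stock photo exhibition opens"))        # None (tie)
```

The second call shows how rules can conflict: the word “stock” fires the Finance rule on a text that is really about culture, which is why noisy labels usually need a cleanup step.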

Check our guide for an extensive introduction to weak supervision with Argilla. Also, check the feature reference for the Define rules mode of the web app and our various tutorials to see practical examples of weak supervision workflows.

Train a model#

The ArgillaTrainer is a wrapper around many of our favorite NLP libraries. It provides a simple, high-level workflow for training with sensible default configurations, so you do not have to worry about transforming the data exported from Argilla. More info here.

[ ]:

from argilla.training import ArgillaTrainer

sentence = "I love this film, but the new remake is terrible."

trainer = ArgillaTrainer(

name="imdb",

workspace="argilla",

framework="spacy",

train_size=0.8

)

trainer.update_config(max_epochs=1, max_steps=1)

trainer.train(output_dir="my_easy_model")

records = trainer.predict(sentence, as_argilla_records=True)

# Print the prediction

print("\ntesting predictions...")

print(sentence)

print(f"Predicted_label: {records.prediction}")
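The train_size=0.8 argument above asks for 80% of the records to be used for training and the rest to be held out. The sketch below only illustrates what such a fractional split means; it is not ArgillaTrainer's internal implementation, and the helper split_records and its seed are assumptions.

```python
import random


def split_records(records, train_size=0.8, seed=42):
    """Shuffle the records reproducibly and split them by the given fraction."""
    shuffled = list(records)
    random.Random(seed).shuffle(shuffled)
    cut = int(len(shuffled) * train_size)
    return shuffled[:cut], shuffled[cut:]


train, test = split_records(range(100))
print(len(train), len(test))
# 80 20
```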

Argilla helps you to create and curate training data. It is not a complete framework for training a model, but we do provide integrations. You can use Argilla alongside other excellent open-source frameworks that focus on developing and training NLP models.

Here we list four of the most commonly used open-source libraries, but many more are available and may be more suited for your specific use case:

transformers: This library provides thousands of pre-trained models for various NLP tasks and modalities. Its excellent documentation focuses on fine-tuning those models to your specific use case;

spaCy: This library also comes with pre-trained models built into a pipeline tackling multiple tasks simultaneously. Since it is a purely NLP library, it comes with many more NLP features than just model training;

spark-nlp: Spark NLP is an open-source text processing library for advanced natural language processing for the Python, Java and Scala programming languages. The library is built on top of Apache Spark and its Spark ML library;

scikit-learn: This de facto standard library is a powerful Swiss army knife for machine learning with some NLP support. Its NLP models usually perform worse than those of transformers or spaCy, but give it a try if you want to train a lightweight model quickly.