💨 Label data with semantic search and Sentence Transformers#

In this tutorial, you’ll learn to use Sentence Transformer embeddings and semantic search to make data labelling significantly faster. It will walk you through the following steps:

💾 use sentence transformers to generate embeddings of a dataset with banking customer requests

🙃 upload the dataset into Argilla for data labelling

🏷 use the similarity search feature to efficiently find and label batches of semantically related examples

Introduction#

In this tutorial, we’ll use the power of embeddings to make data labelling (and curation) more efficient. The idea of exploiting embeddings for labelling is not new, and there are several cool, standalone libraries to label data using embeddings.

Since version 1.2.0, Argilla lets you combine embedding-based similarity with the workflows it already provides: search-based bulk labelling, programmatic labelling using search queries, model pre-annotation, and human-in-the-loop workflows. This also means you can combine keyword search and filters with the new similarity search feature. All of this comes without vendor or model lock-in: you can use any embedding or encoding method, including but not limited to Sentence Transformers, OpenAI, or Co:here. If you want more detail, check the Semantic similarity deep-dive; this tutorial shows you the basics to get started.

Let’s do it!

Running Argilla#

For this tutorial, you will need to have an Argilla server running. There are two main options for deploying and running Argilla:

Deploy Argilla on Hugging Face Spaces: If you want to run tutorials with external notebooks (e.g., Google Colab) and you have an account on Hugging Face, you can deploy Argilla on Spaces with a few clicks:

For details about configuring your deployment, check the official Hugging Face Hub guide.

Launch Argilla using Argilla’s quickstart Docker image: This is the recommended option if you want Argilla running on your local machine. Note that this option will only let you run the tutorial locally and not with an external notebook service.

For more information on deployment options, please check the Deployment section of the documentation.

Tip

This tutorial is a Jupyter Notebook. There are two options to run it:

Use the Open in Colab button at the top of this page. This option allows you to run the notebook directly on Google Colab. Don’t forget to change the runtime type to GPU for faster model training and inference.

Download the .ipynb file by clicking on the View source link at the top of the page. This option allows you to download the notebook and run it on your local machine or on a Jupyter notebook tool of your choice.

Setup#

For this tutorial, you’ll need Argilla’s Python client and a few third-party libraries that can be installed via pip:

[ ]:

%pip install argilla datasets==2.8.0 sentence-transformers==2.2.2 -qqq

Let’s import the Argilla module for reading and writing data:

[ ]:

import argilla as rg

If you are running Argilla using the Docker quickstart image or Hugging Face Spaces, you need to init the Argilla client with the URL and API_KEY:

[ ]:

# Replace api_url with the URL of your HF Space if using Spaces
# Replace api_key if you configured a custom API key
rg.init(
    api_url="http://localhost:6900",
    api_key="admin.apikey"
)

Let’s add the imports we need:

[ ]:

from sentence_transformers import SentenceTransformer
from datasets import load_dataset

💾 Downloading and embedding your dataset#

The code below will load the banking customer requests dataset from the Hub, encode the text field, and create the vectors field which will contain only one key (mini-lm-sentence-transformers). For the purposes of labelling the dataset from scratch, it will also remove the label field, which contains original intent labels.

[ ]:

# Define a fast sentence transformers model
encoder = SentenceTransformer("all-MiniLM-L6-v2", device="cpu")

# Load the test split of the banking77 dataset
dataset = load_dataset("banking77", split="test")

# Encode the text field using batched computation
dataset = dataset.map(
    lambda batch: {"vectors": encoder.encode(batch["text"])},
    batch_size=32,
    batched=True
)

# Remove the original labels because you'll be labelling from scratch
dataset = dataset.remove_columns("label")

# Turn vectors into a dictionary keyed by the vector name
dataset = dataset.map(
    lambda r: {"vectors": {"mini-lm-sentence-transformers": r["vectors"]}}
)

Our dataset now contains a vectors field with the embedding vector generated by the sentence transformer model.

[10]:

dataset.to_pandas().head()

[10]:

| | text | vectors |
|---|---|---|
| 0 | How do I locate my card? | {'mini-lm-sentence-transformers': [-0.01016708... |
| 1 | I still have not received my new card, I order... | {'mini-lm-sentence-transformers': [-0.04284123... |
| 2 | I ordered a card but it has not arrived. Help ... | {'mini-lm-sentence-transformers': [-0.03365558... |
| 3 | Is there a way to know when my card will arrive? | {'mini-lm-sentence-transformers': [0.012195908... |
| 4 | My card has not arrived yet. | {'mini-lm-sentence-transformers': [-0.04361863... |
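To make the resulting record layout explicit, here is a minimal plain-Python sketch of the same two steps: batched encoding, then wrapping each vector in a dictionary keyed by a vector name. The `toy_encoder` function is a hypothetical stand-in for `encoder.encode`, and `embed_records` is an illustrative helper, not part of Argilla or `datasets`.

```python
from typing import Callable, Dict, List

# Same vector name as used in the tutorial's "vectors" field
VECTOR_NAME = "mini-lm-sentence-transformers"


def toy_encoder(texts: List[str], dim: int = 4) -> List[List[float]]:
    """Hypothetical stand-in for encoder.encode: returns deterministic toy vectors."""
    return [[float((len(text) + i) % 7) for i in range(dim)] for text in texts]


def embed_records(
    texts: List[str],
    encode: Callable[[List[str]], List[List[float]]],
    batch_size: int = 32,
) -> List[Dict]:
    """Encode texts batch by batch and attach one named vector to each record."""
    records = []
    for start in range(0, len(texts), batch_size):
        batch = texts[start:start + batch_size]
        for text, vector in zip(batch, encode(batch)):
            records.append({"text": text, "vectors": {VECTOR_NAME: list(vector)}})
    return records


records = embed_records(
    ["How do I locate my card?", "My card has not arrived yet."],
    toy_encoder,
    batch_size=1,
)
```

Each record ends up with a single key under `vectors`, which is the shape shown in the table above. With the real model you would pass `encoder.encode` instead of the toy function.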

🙃 Upload dataset into Argilla#

The original banking77 dataset is an intent classification dataset with 77 fine-grained labels (lost_card, card_arrival, etc.). To keep this tutorial simple, we define a simplified labelling scheme with higher-level classes: ["change_details", "card", "atm", "top_up", "balance", "transfer", "exchange_rate", "pin"].

Let’s define the dataset settings, configure the dataset, and upload our dataset with vectors.

[ ]:

rg_ds = rg.DatasetForTextClassification.from_datasets(dataset)

# Our labelling scheme
settings = rg.TextClassificationSettings(
    label_schema=["change_details", "card", "atm", "top_up", "balance", "transfer", "exchange_rate", "pin"]
)
rg.configure_dataset(name="banking77-topics", settings=settings)

rg.log(
    name="banking77-topics",
    records=rg_ds,
    chunk_size=50,
)

🏷 Bulk labelling with the find similar action#

Now that our banking77-topics dataset is available in the Argilla UI, we can start annotating our data using semantic similarity search. The workflow is as follows:

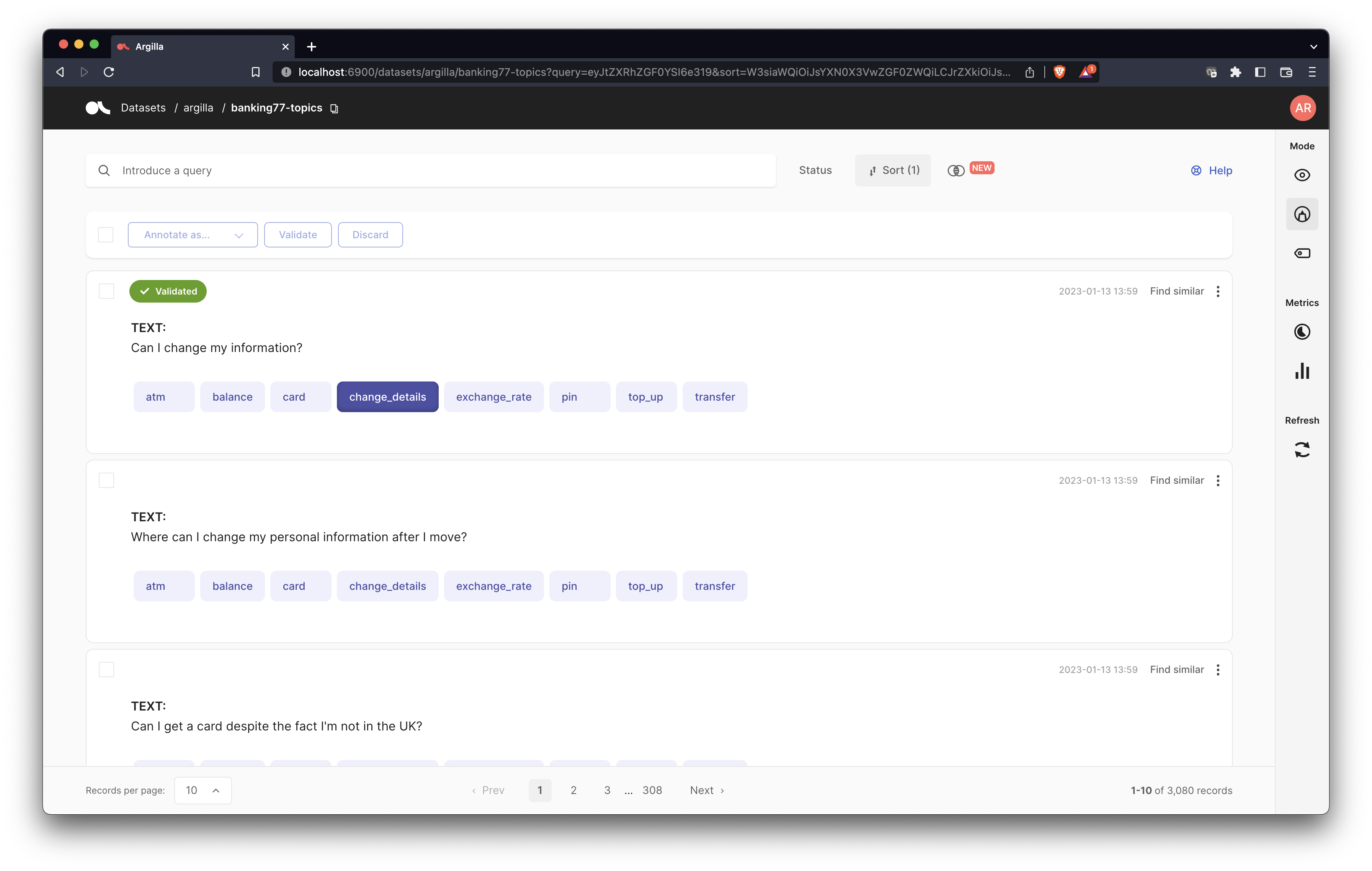

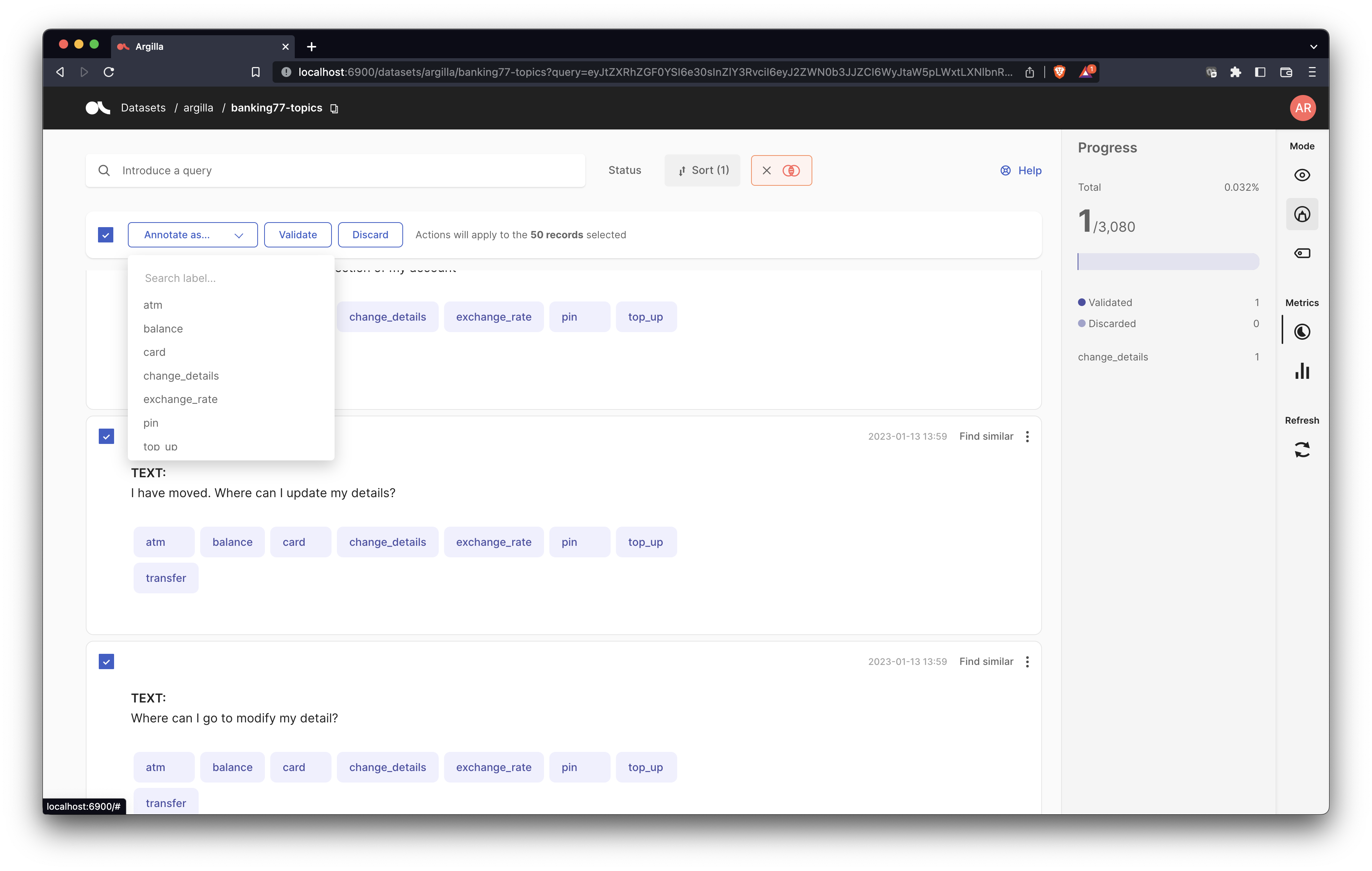

Label a record (e.g., “Change my information”) with the label change_details, and then click Find similar at the top right of your record.

As a result, you’ll get a list of the most similar records, sorted by similarity in descending order.

You can now review the records and assign either the change_details label or any other. For our use case, we see that most of the suggested records fall into the same category.

Let’s see it step-by-step:

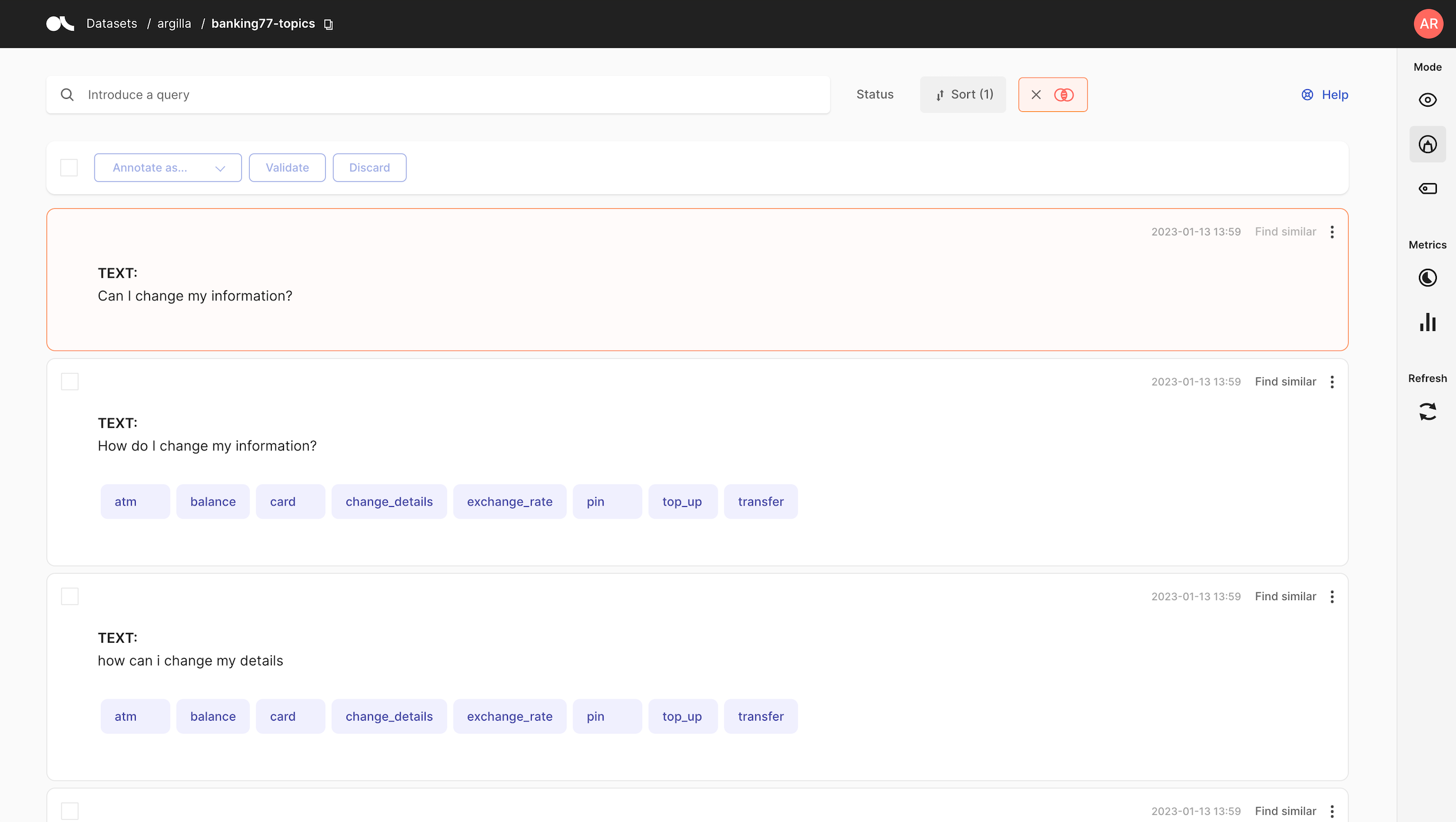

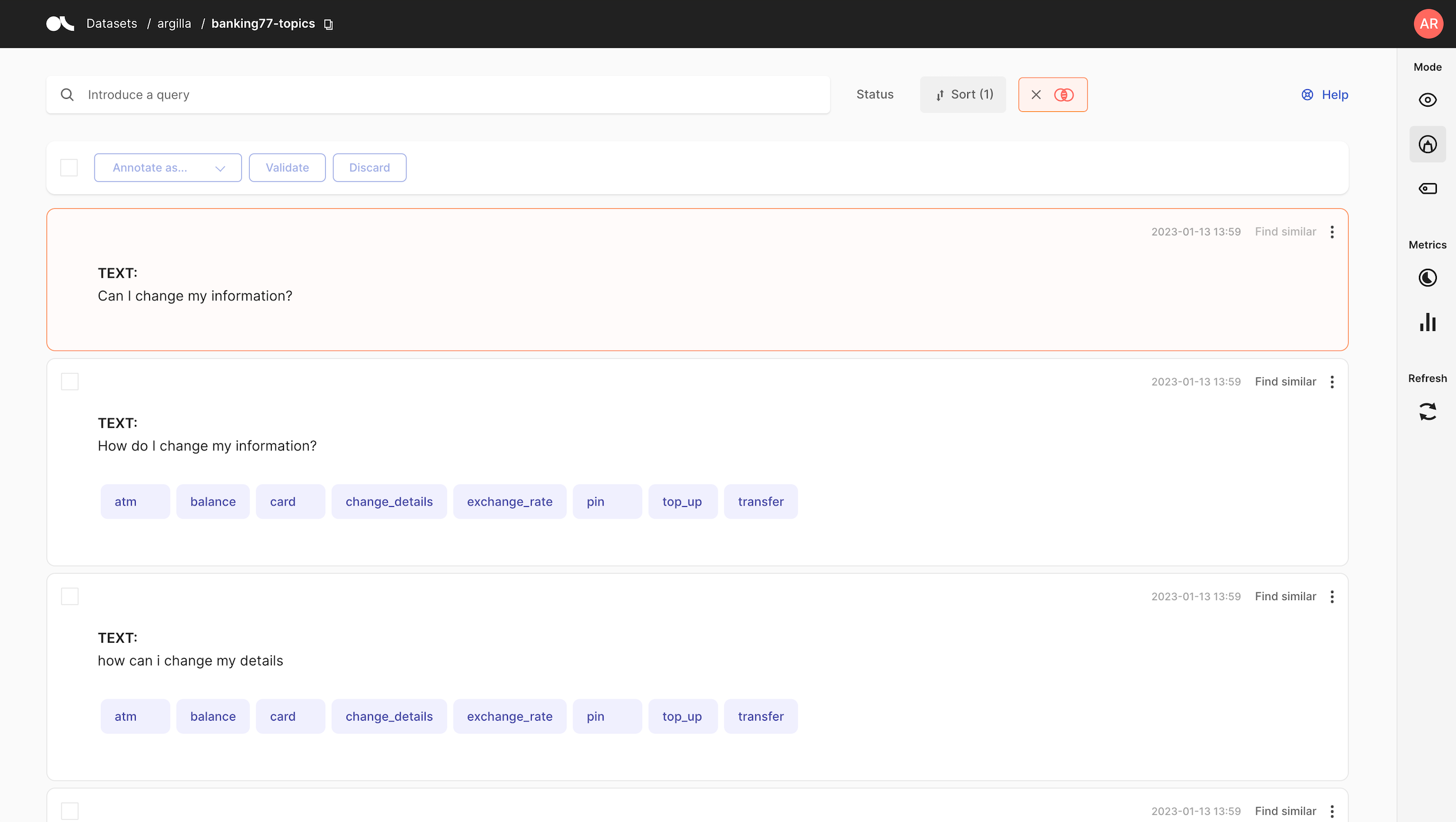

Label a record#

Using the hand-labelling mode, you can label a record like the one below:

Now, if you want to find records that are semantically similar to this one, or even duplicates of it, you can use the Find similar button.

Find similar#

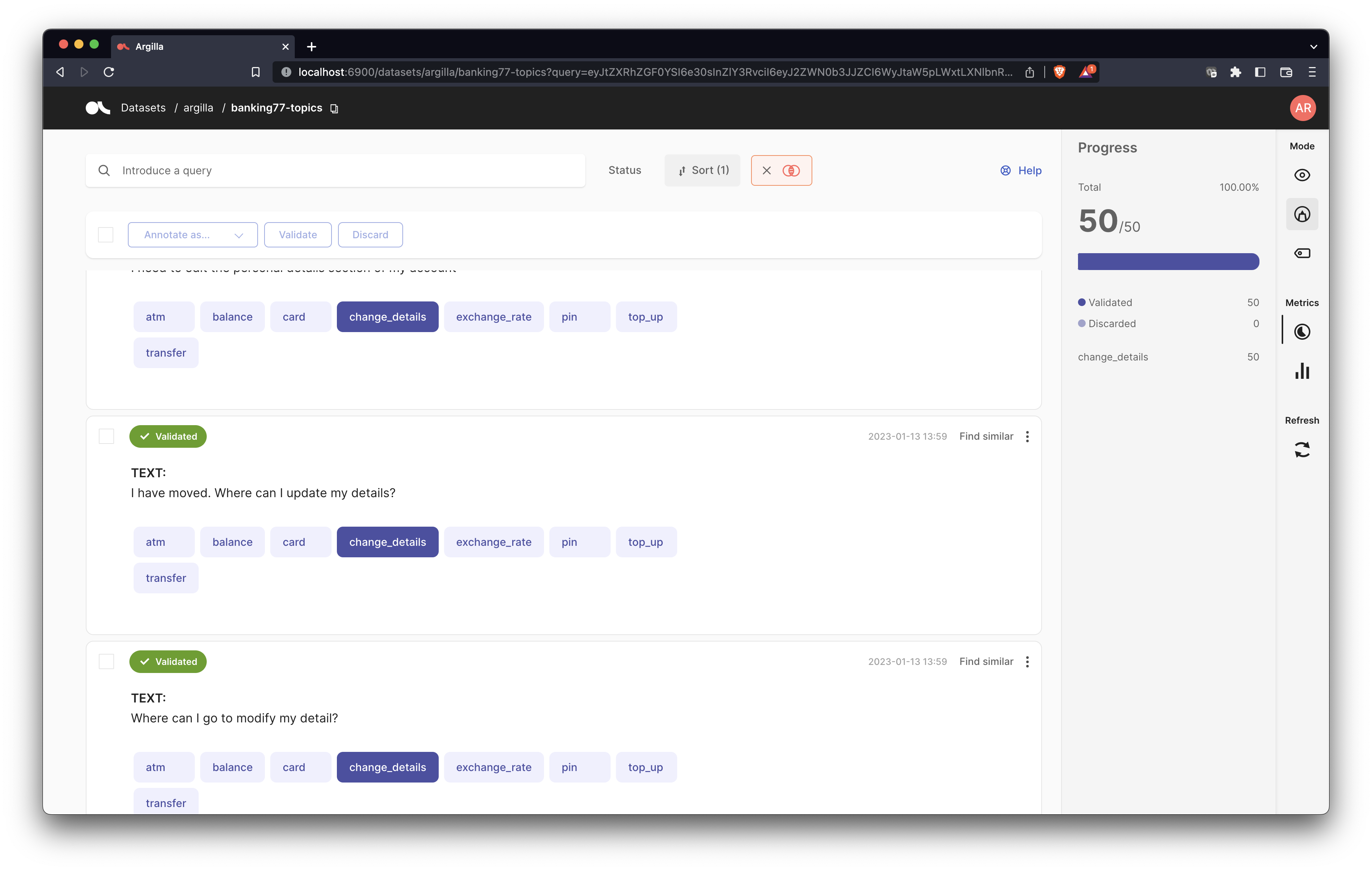

As a result, you’ll get a list of the 50 most similar records.

Note

Remember that you can combine this similarity search with the other search features: keywords, the query string DSL, and filters. For example, if you have a filter enabled, the Find similar action returns the most similar records from the subset of records that match the filter.

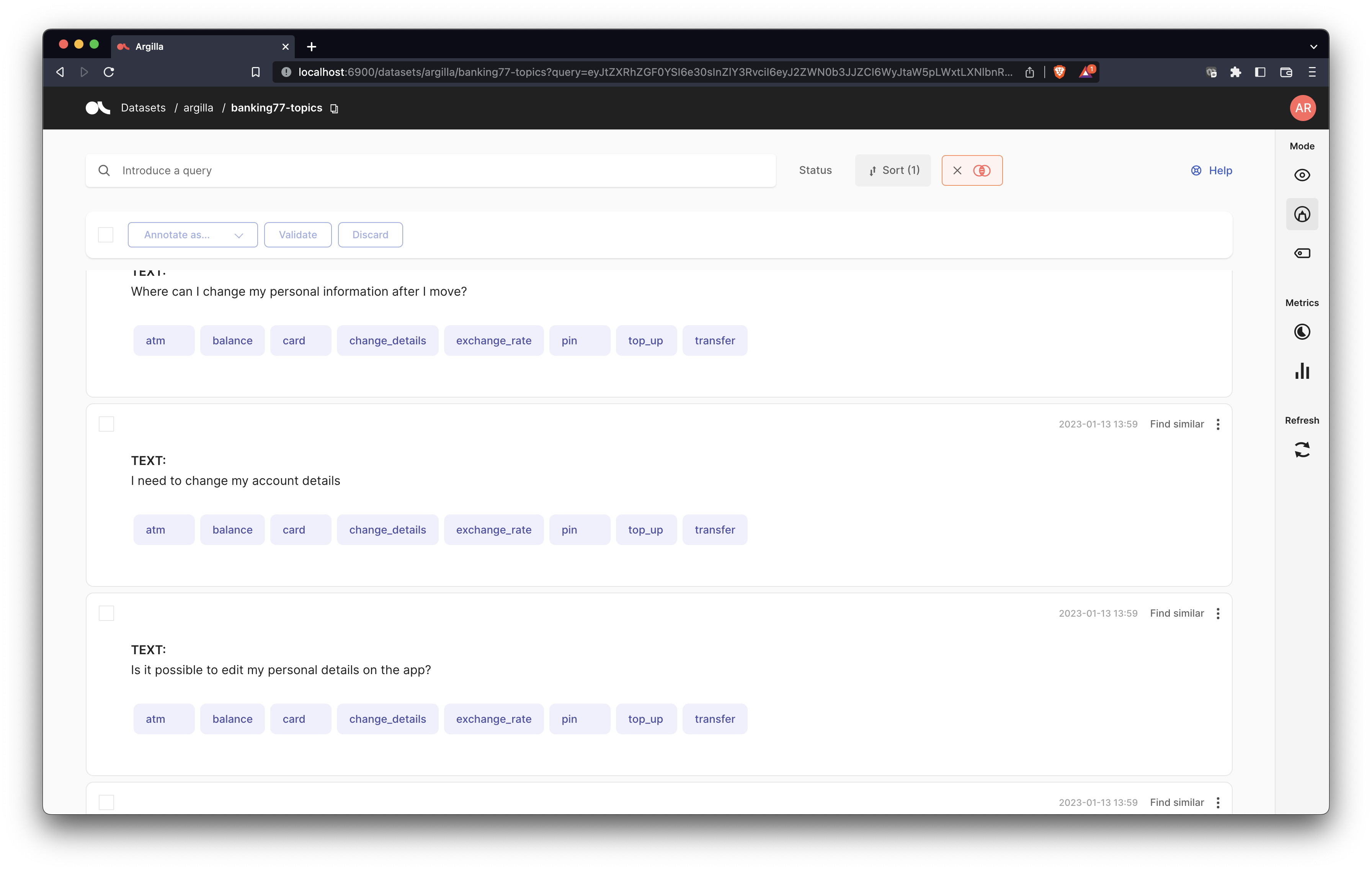

As you can see, the model effectively captures similar meaning without needing explicit shared words, e.g., details vs. information.
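Conceptually, Find similar ranks the other records by how close their vectors are to the vector of the selected record. The sketch below illustrates the idea with a brute-force search in plain Python, assuming cosine similarity as the metric. Argilla delegates the actual search to its backend, so this is not its implementation. The function names, the toy three-dimensional vectors, and the `keep` parameter (a stand-in for an active filter) are all hypothetical.

```python
import math
from typing import Callable, Dict, List, Optional, Tuple


def cosine_similarity(a: List[float], b: List[float]) -> float:
    """Cosine of the angle between two vectors (0.0 if either has zero length)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0
    return dot / (norm_a * norm_b)


def find_similar(
    query_id: str,
    vectors: Dict[str, List[float]],
    k: int = 50,
    keep: Optional[Callable[[str], bool]] = None,
) -> List[Tuple[str, float]]:
    """Return up to k (record_id, score) pairs, most similar first.

    If `keep` is given, only records for which it returns True are candidates,
    mimicking a similarity search restricted to a filtered subset.
    """
    query = vectors[query_id]
    scored = [
        (record_id, cosine_similarity(query, vector))
        for record_id, vector in vectors.items()
        if record_id != query_id and (keep is None or keep(record_id))
    ]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:k]


# Toy vectors (made up for illustration); real embeddings have hundreds of dimensions
toy_vectors = {
    "change my information": [0.9, 0.1, 0.0],
    "update my details": [0.8, 0.2, 0.1],
    "where is the nearest atm": [0.0, 0.1, 0.9],
}

neighbours = find_similar("change my information", toy_vectors, k=2)
filtered = find_similar(
    "change my information",
    toy_vectors,
    k=2,
    keep=lambda record_id: "atm" not in record_id,
)
```

In this toy setup, "update my details" ranks above the ATM question even though it shares no content words with the query, which mirrors the details vs. information observation above.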

Review records#

At this point, you can label the records one by one or scroll down to review them before using the bulk-labelling button at the top of the records list.

Bulk label#

For this tutorial, our labels are sufficiently fine-grained for the embeddings to group records that fall under the same topic. So in this case, it is safe to use the bulk labelling feature directly, effectively labelling 50 semantically similar examples after a quick review.

Warning

For other use cases, you might need to be more careful and combine this feature with search queries and filters. For quick experimentation, you can also assume you’ll make some labelling errors and then use tools like cleanlab for detecting label errors.
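One cautious variant of bulk labelling is to accept only the neighbours whose similarity score clears a threshold and to leave the rest for manual review. The sketch below shows that idea offline, in plain Python. The helper name, the example scores, and the threshold of 0.8 are hypothetical and chosen only for illustration. This is not a feature of the Argilla UI described in this tutorial.

```python
from typing import List, Tuple


def split_for_bulk_labelling(
    neighbours: List[Tuple[str, float]],
    min_score: float = 0.8,
) -> Tuple[List[str], List[str]]:
    """Split (record_id, score) pairs into bulk-label and manual-review lists."""
    bulk = [record_id for record_id, score in neighbours if score >= min_score]
    review = [record_id for record_id, score in neighbours if score < min_score]
    return bulk, review


# Made-up similarity scores for records returned by a similarity search
neighbours = [
    ("update my details", 0.98),
    ("edit my address", 0.85),
    ("where is the nearest atm", 0.12),
]

bulk_ids, review_ids = split_for_bulk_labelling(neighbours, min_score=0.8)

# Assign the label only to the high-confidence neighbours
annotations = {record_id: "change_details" for record_id in bulk_ids}
```

A suitable threshold depends on the embedding model and on how broad your labels are, so it is worth checking a sample of the accepted records before trusting any particular value.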

Summary#

In this tutorial, you learned to use similarity search for data labelling with Argilla by using Sentence Transformers to embed your raw data.

Next steps#

If you want to continue learning Argilla:

🙋♀️ Join the Argilla Slack community!

⭐ Star the Argilla GitHub repo to stay updated.

📚 Argilla documentation for more guides and tutorials.