Monitoring LangChain apps

This guide explains how to use the ArgillaCallbackHandler to integrate Argilla with LangChain apps. With this integration, Argilla can be used to evaluate and fine-tune LLMs. It works by collecting the interactions with LLMs and pushing them into a FeedbackDataset for continuous monitoring and human feedback. You just need to create a LangChain-compatible FeedbackDataset in Argilla, then instantiate the ArgillaCallbackHandler and provide it to LangChain LLMs, Chains, and/or Agents.

How to create a LangChain-compatible FeedbackDataset

Because of how LangChain callbacks and FeedbackDatasets work, the FeedbackDataset you create in Argilla must follow a specific structure for its fields, while the questions and guidelines remain open and can be defined by the user.

The FeedbackDataset needs to have the following fields: prompt and response. The prompt field stores the input provided to the LLMs, while the response field stores the output generated by the LLMs.

The questions and guidelines, on the other hand, can be defined freely: the ArgillaCallbackHandler does not use them to collect the data generated by the LLMs, but annotators will use them to annotate the FeedbackDataset.
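The field requirement can be expressed as a simple check. The following is a minimal, hypothetical sketch that is not part of the Argilla or LangChain APIs: `field_problems` and `is_langchain_compatible` are illustrative names, and the sketch assumes the dataset should contain exactly the prompt and response fields. Questions and guidelines are deliberately not inspected, since the callback never writes to them.

```python
# Hypothetical helper, not part of Argilla or LangChain.
# Assumption: a LangChain-compatible dataset has exactly these two fields.
EXPECTED_FIELDS = {"prompt", "response"}


def field_problems(field_names):
    """Report which expected fields are missing and which are unexpected."""
    names = set(field_names)
    return {
        "missing": sorted(EXPECTED_FIELDS - names),
        "unexpected": sorted(names - EXPECTED_FIELDS),
    }


def is_langchain_compatible(field_names):
    """True when the fields match the expected structure.

    Questions and guidelines are not checked: they are free-form.
    """
    problems = field_problems(field_names)
    return not problems["missing"] and not problems["unexpected"]


print(is_langchain_compatible(["prompt", "response"]))  # True
print(field_problems(["prompt", "answer"]))
# {'missing': ['response'], 'unexpected': ['answer']}
```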

Here’s an example of how to create a FeedbackDataset in Argilla that can be used with ArgillaCallbackHandler:

import argilla as rg

# Connect to your Argilla instance
rg.init(
    api_url="...",
    api_key="...",
)

dataset = rg.FeedbackDataset(
    # The two fields the ArgillaCallbackHandler writes to
    fields=[
        rg.TextField(name="prompt", required=True),
        rg.TextField(name="response", required=True),
    ],
    # Free-form questions for annotators; the callback does not use them
    questions=[
        rg.RatingQuestion(
            name="response-rating",
            description="How would you rate the quality of the response?",
            values=[1, 2, 3, 4, 5],
            required=True,
        ),
        rg.TextQuestion(
            name="response-correction",
            description="If you think the response is not accurate, please, correct it.",
            required=False,
        ),
    ],
    guidelines="Please, read the questions carefully and try to answer them as accurately as possible.",
)

Then push the FeedbackDataset to Argilla as follows; otherwise, the ArgillaCallbackHandler won't work.

dataset.push_to_argilla("langchain-dataset")

For more information on how to create a FeedbackDataset, please refer to the Create a Feedback Dataset guide.

How to use ArgillaCallbackHandler

Like all LangChain callbacks, the ArgillaCallbackHandler is instantiated and provided to LangChain LLMs, Chains, and/or Agents; from then on, there's no need to worry about it, as it automatically keeps track of everything taking place in the LangChain pipeline. In this case, it tracks both the input and the final response provided by the LLMs, Chains, and/or Agents.

from langchain.callbacks import ArgillaCallbackHandler

argilla_callback = ArgillaCallbackHandler(
    dataset_name="langchain-dataset",
    api_url="...",
    api_key="...",
)

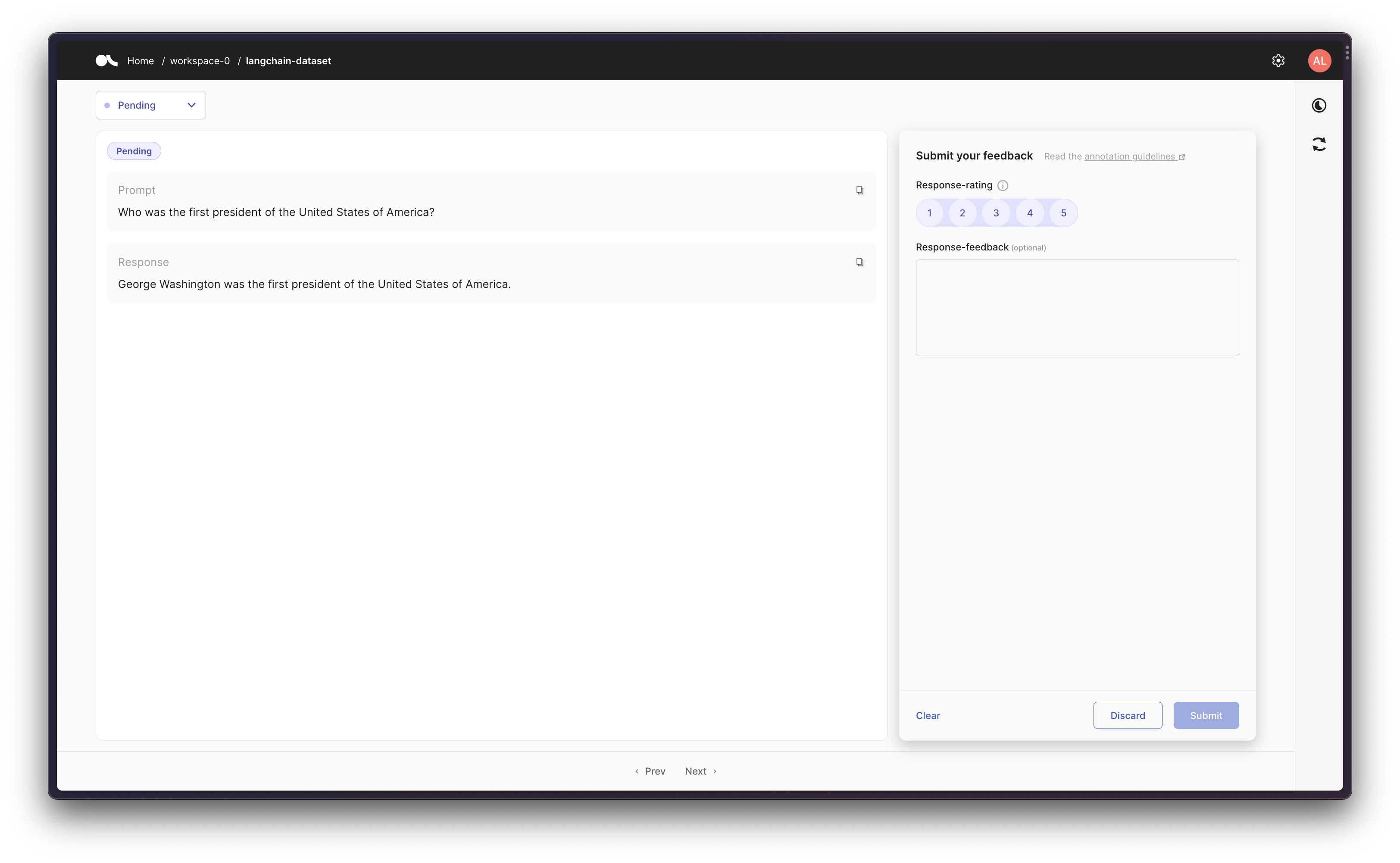

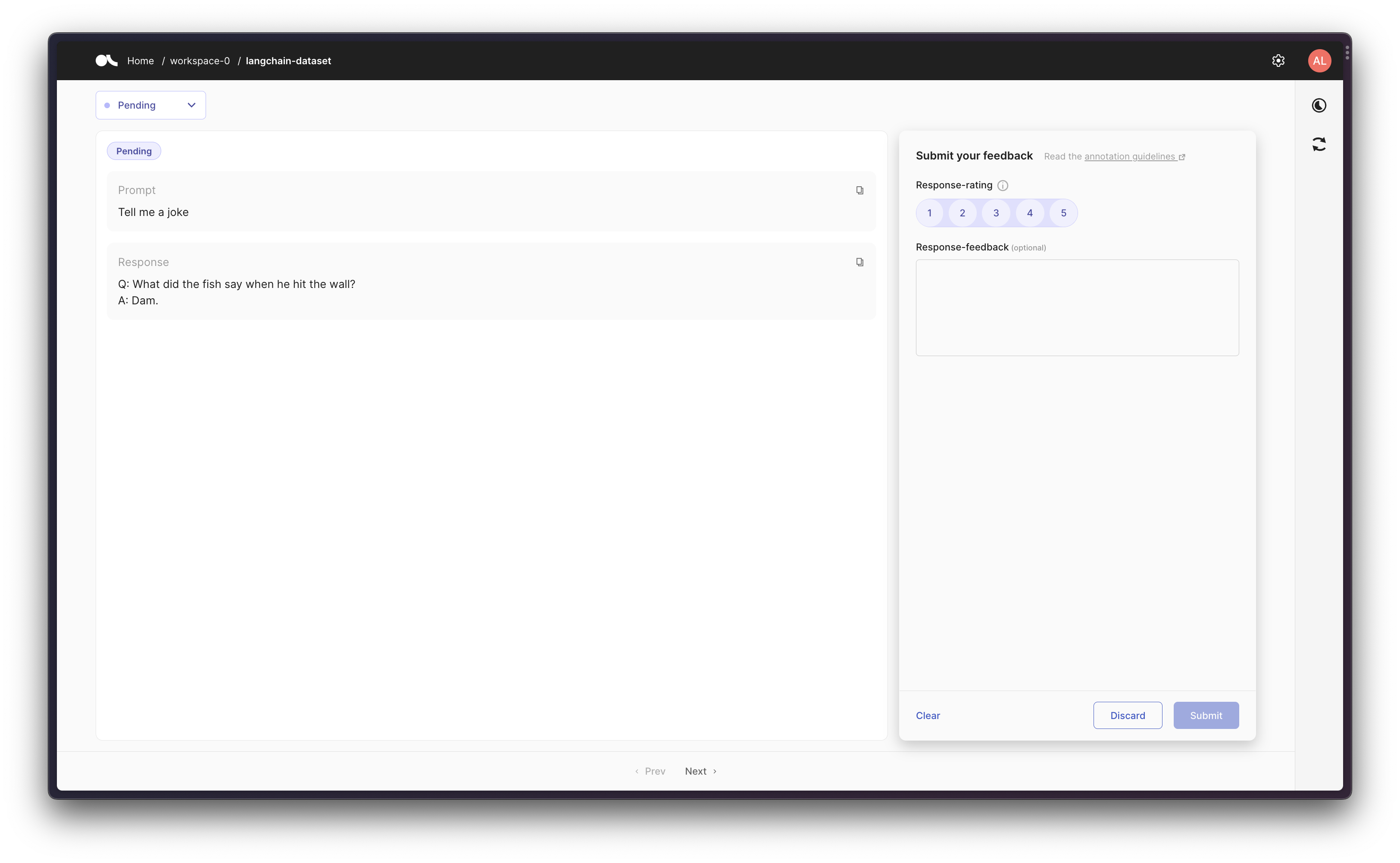
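To make the callback mechanism concrete, here is a toy, self-contained illustration of the pattern. It is not LangChain's or Argilla's implementation: `RecordingCallback` and `FakeLLM` are hypothetical classes, and although the hook names mirror LangChain's `on_llm_start`/`on_llm_end`, their signatures are simplified here. The model calls its callbacks before and after generating, and the callback pairs each prompt with its response, much as the real handler builds prompt/response records.

```python
# Toy illustration of the callback pattern; NOT LangChain's or Argilla's code.
# Hook names mirror LangChain's on_llm_start/on_llm_end, with simplified signatures.


class RecordingCallback:
    """Collects one {"prompt", "response"} record per prompt."""

    def __init__(self):
        self.records = []
        self._pending = []

    def on_llm_start(self, prompts):
        # Remember the inputs until the model finishes.
        self._pending = list(prompts)

    def on_llm_end(self, responses):
        # Pair each stored prompt with the response generated for it.
        for prompt, response in zip(self._pending, responses):
            self.records.append({"prompt": prompt, "response": response})
        self._pending = []


class FakeLLM:
    """Hypothetical stand-in model that echoes its prompts (no API key needed)."""

    def __init__(self, callbacks):
        self.callbacks = callbacks

    def generate(self, prompts):
        for cb in self.callbacks:
            cb.on_llm_start(prompts)
        responses = [f"echo: {p}" for p in prompts]
        for cb in self.callbacks:
            cb.on_llm_end(responses)
        return responses


callback = RecordingCallback()
llm = FakeLLM(callbacks=[callback])
llm.generate(["Tell me a joke"])
print(callback.records)
# [{'prompt': 'Tell me a joke', 'response': 'echo: Tell me a joke'}]
```

The caller never touches the callback after attaching it, which is the property the real integration relies on.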

Scenario 1: Tracking an LLM

First, let’s just run a single LLM a few times and capture the resulting prompt-response pairs in Argilla.

from langchain.callbacks import ArgillaCallbackHandler
from langchain.llms import OpenAI

# Log the prompt/response pairs to the "langchain-dataset" FeedbackDataset
argilla_callback = ArgillaCallbackHandler(
    dataset_name="langchain-dataset",
    api_url="...",
    api_key="...",
)

llm = OpenAI(temperature=0.9, callbacks=[argilla_callback])

# Six prompts in total: each of the two prompts is repeated three times
llm.generate(["Tell me a joke", "Tell me a poem"] * 3)

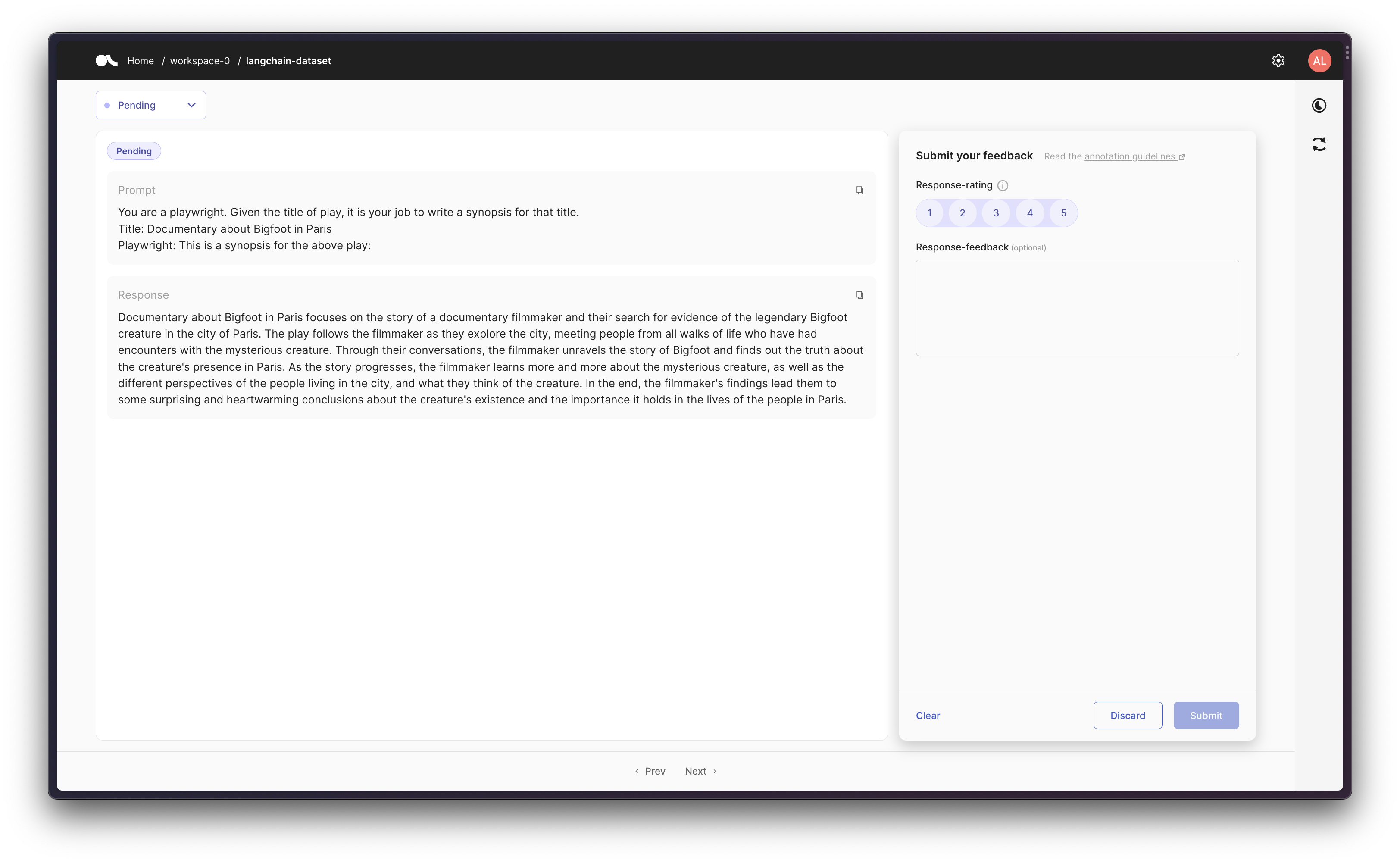
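To anticipate how many records this run adds to the dataset, the following stdlib-only sketch counts the prompts in the list passed to llm.generate. It assumes one completion per prompt (the `completions_per_prompt` variable is an illustrative assumption, not a LangChain setting), and therefore one record per prompt.

```python
from collections import Counter

# The same list passed to llm.generate() in the example above.
prompts = ["Tell me a joke", "Tell me a poem"] * 3

# Assumption: one completion per prompt, hence one record per prompt.
completions_per_prompt = 1
expected_records = len(prompts) * completions_per_prompt

print(expected_records)  # 6
print(Counter(prompts))  # Counter({'Tell me a joke': 3, 'Tell me a poem': 3})
```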

Scenario 2: Tracking an LLM in a chain

Next, we can create a chain from a prompt template and track the initial prompt and the final response in Argilla.

from langchain.callbacks import ArgillaCallbackHandler
from langchain.chains import LLMChain
from langchain.llms import OpenAI
from langchain.prompts import PromptTemplate

argilla_callback = ArgillaCallbackHandler(
    dataset_name="langchain-dataset",
    api_url="...",
    api_key="...",
)

llm = OpenAI(temperature=0.9, callbacks=[argilla_callback])

template = """You are a playwright. Given the title of a play, it is your job to write a synopsis for that title.
Title: {title}
Playwright: This is a synopsis for the above play:"""

prompt_template = PromptTemplate(input_variables=["title"], template=template)

# Attach the callback to the chain too, so its input and final output are tracked
synopsis_chain = LLMChain(llm=llm, prompt=prompt_template, callbacks=[argilla_callback])

test_prompts = [{"title": "Documentary about Bigfoot in Paris"}]
synopsis_chain.apply(test_prompts)
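To see what text actually reaches the LLM in this chain, the following sketch fills a copy of the synopsis template with Python's built-in str.format. This is an approximation that assumes PromptTemplate's default f-string-style placeholders; it does not call LangChain and does not show exactly what the callback logs.

```python
# Plain-Python approximation of PromptTemplate formatting, assuming the
# default f-string-style {placeholder} syntax.
template = """You are a playwright. Given the title of a play, it is your job to write a synopsis for that title.
Title: {title}
Playwright: This is a synopsis for the above play:"""

test_prompts = [{"title": "Documentary about Bigfoot in Paris"}]

# One formatted prompt per input dictionary, as the chain would build them.
formatted = [template.format(**inputs) for inputs in test_prompts]

for text in formatted:
    print(text)
```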

Scenario 3: Using an Agent with Tools

Finally, as a more advanced workflow, you can create an agent that uses some tools. In this case, the ArgillaCallbackHandler keeps track of the input and the output, but not of the intermediate steps or thoughts: for a given prompt, it logs the original prompt and the final response to it.

Note that this scenario uses the Google Search API (SerpAPI), so you will need to install the google-search-results package with pip install google-search-results and set the SerpAPI key with os.environ["SERPAPI_API_KEY"] = "..." (you can find it at https://serpapi.com/dashboard); otherwise, the example below won't work.
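Since the agent fails if a key is missing, a quick pre-flight check can help. This is a hypothetical sketch: `missing_env_vars` is an illustrative helper, SERPAPI_API_KEY comes from the note above, and OPENAI_API_KEY is the environment variable LangChain's OpenAI LLM reads when no key is passed explicitly.

```python
import os

# Hypothetical pre-flight check, not part of LangChain or Argilla.
# SERPAPI_API_KEY is required by the "serpapi" tool; OPENAI_API_KEY is read
# by LangChain's OpenAI LLM when no key is passed explicitly.
REQUIRED_ENV_VARS = ["SERPAPI_API_KEY", "OPENAI_API_KEY"]


def missing_env_vars(names, environ=os.environ):
    """Return the names that are unset or empty in ``environ``."""
    return [name for name in names if not environ.get(name)]


missing = missing_env_vars(REQUIRED_ENV_VARS)
if missing:
    print("Set these before running the agent:", ", ".join(missing))
```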

import os

from langchain.agents import AgentType, initialize_agent, load_tools
from langchain.callbacks import ArgillaCallbackHandler
from langchain.llms import OpenAI

# Required by the "serpapi" tool (see the note above)
os.environ["SERPAPI_API_KEY"] = "..."

argilla_callback = ArgillaCallbackHandler(
    dataset_name="langchain-dataset",
    api_url="...",
    api_key="...",
)

llm = OpenAI(temperature=0.9, callbacks=[argilla_callback])
tools = load_tools(["serpapi"], llm=llm, callbacks=[argilla_callback])
agent = initialize_agent(
    tools,
    llm,
    agent=AgentType.ZERO_SHOT_REACT_DESCRIPTION,
    callbacks=[argilla_callback],
)

agent.run("Who was the first president of the United States of America?")
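The input/final-output behavior described for this scenario can be illustrated with a toy trace. In this sketch, the step structure, the `to_record` helper, and the placeholder texts are all hypothetical and do not mirror LangChain's internal objects. Only the original prompt and the final response survive; thoughts and tool calls are dropped.

```python
# Hypothetical agent trace; the structure is invented for illustration and
# does not mirror LangChain's internal objects.
trace = [
    {"type": "input", "text": "Who was the first president of the United States of America?"},
    {"type": "thought", "text": "<intermediate thought>"},
    {"type": "tool", "text": "<search results>"},
    {"type": "final", "text": "<final answer>"},
]


def to_record(trace):
    """Keep only the original prompt and the final response."""
    prompt = next(step["text"] for step in trace if step["type"] == "input")
    response = next(step["text"] for step in reversed(trace) if step["type"] == "final")
    return {"prompt": prompt, "response": response}


print(to_record(trace))
# {'prompt': 'Who was the first president of the United States of America?', 'response': '<final answer>'}
```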